This is "part one" of two articles about setting up a QA process in a startup. Find part 2 here: Lightweight QA strategies for startups that care about quality.

"QA" and "process" in the same sentence with "startup"? What about "move fast and break things" and all that?

Have you ever heard that startups change every quarter? They do. However, even in such a fast-paced environment, you can have both (QA and process) if you adapt continuously and keep things lightweight.

Oh, and if you’re an orthodox believer in the motto above, know one thing: sometimes, if you move too fast and break too many things, your customers get angry. Very angry.

Our key achievements

My team and I built a QA process from scratch. We wouldn’t have achieved that without enormous support from other departments, so this is a success story of many people.

Over several months we experimented with many forms of QA: manual testing, automation, mixed testing, fixed monthly releases, continuous deployment, and others. That gave me a chance to look at each of those approaches and share the lessons I learned with you.

As a result of establishing a QA process, we successfully:

- prevented significant production regressions,

- helped to rebuild confidence in the product’s quality,

- improved the release process,

- contributed to on-time releases,

- built a full QA process,

- made customers happier :),

- helped to implement continuous deployment.

This article explains the path we went through and gives hints on making the right decisions.

Let’s travel back in time to mid-2019…

Rewind

It was early autumn, and the company I was working at was undergoing a major engineering reorganization. My role at that point was leading a remote team of engineers distributed across Europe. Little did I know that the hat I was wearing was about to change.

The CEO of the company had identified the need to bring product quality under more structured and organized control. He asked me to lead a yet-to-be-born QA department.

For someone like me who thrives on solving problems in various domains, it was fascinating to pick up the challenge.

The primary rationale that guided me was that customers expect stability, predictability, and quality after the initial "wow" moment your product delivers.

Know where you are

Have you ever been to an "escape room"? If not, it’s a physical game where you’re locked in a room, and your task is to solve multiple puzzles hidden in the area to open a closed door and escape.

So what do you do first? You look around and establish where you are!

That’s precisely the first thing you can do when trying to set up a QA process. See what’s around and what’s already available.

Before you start envisioning and implementing changes, it’s useful to ask yourself some questions first.

Here is a sample checklist grouped into sections.

Org & Awareness

Start with a few simple questions.

- what do people in your company know about QA?

- do they understand its role and place in the organization?

- do people understand the value that QA adds to the business?

The answers tell you what people expect from QA and whether there are any early signs of potential conflicts. For example, some people may think that QA’s role is merely finding bugs; others may think its function is to write automated e2e tests and nothing besides that. Knowing what other people think about QA will help you communicate your plan better.

The last question is crucial, especially at startups. Given scarce resources, startups can’t afford things that don’t add value to their business. If your company doesn’t understand "why" QA exists, you will have a hard time convincing anybody to do anything related to QA.

Bugs / Defects / Quality

Another area you can focus on is how you currently manage and measure quality, particularly with regard to bugs and defects.

- how do you measure quality? What does quality mean to you? Is it just the number of defects? Is it "meeting acceptance criteria"? Is it performance? Is it UX?

- how do you report bugs? Do you track their number?

- how many production regressions do you have? What’s their severity?

- how many regressions get caught before they get to production?

- how do you deal with hotfixes in production?

The number of reported (production) defects/regressions in the system is one of the key performance indicators for QA. If you can show that this number goes down as a result of QA’s efforts, you demonstrate QA’s business value. Fewer bugs = fewer angry customers = more retention = more money.
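As a rough illustration of tracking that indicator, here is a minimal Python sketch. It assumes you can count, per release, how many regressions were caught before the release and how many were reported from production. The `ReleaseStats` type, the `escape_rate` function, and the sample numbers are all hypothetical and only show the shape of the calculation.

```python
from dataclasses import dataclass


@dataclass
class ReleaseStats:
    """Regression counts for one release (hypothetical structure)."""
    name: str
    caught_before_release: int   # regressions found while testing
    escaped_to_production: int   # regressions reported after the release


def escape_rate(stats: ReleaseStats) -> float:
    """Share of known regressions that reached production (0.0 to 1.0)."""
    total = stats.caught_before_release + stats.escaped_to_production
    if total == 0:
        return 0.0  # nothing found anywhere, so nothing escaped
    return stats.escaped_to_production / total


if __name__ == "__main__":
    # Made-up numbers, purely for illustration.
    history = [
        ReleaseStats("release-1", caught_before_release=2, escaped_to_production=8),
        ReleaseStats("release-2", caught_before_release=5, escaped_to_production=5),
        ReleaseStats("release-3", caught_before_release=9, escaped_to_production=1),
    ]
    for release in history:
        print(f"{release.name}: {escape_rate(release):.0%} of regressions escaped")
```

A falling escape rate across releases is one simple way to show the trend to the rest of the company; the raw count of production regressions, split by severity, is worth reporting next to it.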

E2E Test Cases

Writing and running end-to-end test cases is one of QA’s main jobs. As a QA at a startup, you need to balance what you should test against what you can test, given the limited resources you have.

- how is the app tested currently? Unit tests, integration, e2e?

- do you have e2e automation? What framework do you use?

- do you test manually? How do you keep track of manual test cases?

- do you keep track of test runs?

- how are your manual test cases organized?

- what is your e2e test coverage?

- how are the automated e2e tests linked to manual test cases?

- how is writing automation planned? How is it prioritized? Who is the key stakeholder?

There are thousands of features in an extensive system. Even a relatively small application can have hundreds of features. Features can have dozens of corner cases and input variants. Some of them cover critical user flows; some of them are rarely ever touched by the user. Knowing the current state of test coverage, you can judge whether the right things are being tested and whether you should build something from the ground up.
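One way to make that assessment concrete is to list features with a rough criticality score and check which critical ones have no e2e test. The sketch below is only an assumption about how such an inventory could look: the `Feature` type, the 1 to 5 criticality scale, and the threshold of 4 are invented for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Feature:
    """One entry in a hypothetical feature inventory."""
    name: str
    criticality: int    # assumed scale: 1 (rarely used) to 5 (critical user flow)
    has_e2e_test: bool


def untested_by_priority(features: List[Feature]) -> List[Feature]:
    """Features without e2e coverage, most critical first."""
    gaps = [f for f in features if not f.has_e2e_test]
    return sorted(gaps, key=lambda f: f.criticality, reverse=True)


def critical_coverage(features: List[Feature], threshold: int = 4) -> float:
    """Fraction of critical features (criticality >= threshold) that have an e2e test."""
    critical = [f for f in features if f.criticality >= threshold]
    if not critical:
        return 1.0  # no critical features, so nothing is missing
    return sum(f.has_e2e_test for f in critical) / len(critical)


if __name__ == "__main__":
    # Example inventory with invented features.
    inventory = [
        Feature("login", 5, True),
        Feature("checkout", 5, False),
        Feature("csv export", 2, False),
        Feature("avatar upload", 1, True),
    ]
    for feature in untested_by_priority(inventory):
        print(f"missing e2e test: {feature.name} (criticality {feature.criticality})")
    print(f"critical coverage: {critical_coverage(inventory):.0%}")
```

Even a spreadsheet with the same three columns does the job; the point is to sort the gaps by how much they matter to users rather than by how easy they are to test.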

Infrastructure

QA is not a "solo" game. To get it right, you need several departments to work together, supporting QA. Unless you have the skills and the capacity, you may want to rely on your DevOps team to help you set up the right infrastructure.

- do you have the infrastructure to run "staging"? Do you have "integration"?

- what’s the git-flow you’re using?

- do you have CI? Do you have/want to have CD?

Understanding your infrastructure can help you plan the necessary work that you may need to delegate to another team. For example, not being able to deploy artifacts to a hosted environment will turn into a time-consuming chore, as you will need to run the system locally to test it. That operation may not be trivial and will not reflect the product that the user gets (the standard "works locally, breaks on production" issue).

Product

Your Product Team is yet another department that you will want to be close friends with. They are the people who decide what the shape of the product should be. In a startup, the "Product Team" might be just one dedicated person or even the CEO themselves. However, it’s important to recognize that role as a stakeholder.

- how many features do you have in your system? Are they documented?

- which features are critical to your user? E.g., what are the top 3 things users do when they log into your system?

Knowing the above will help you organize your work. You will know what to test and how to prioritize it. If nothing is documented, you will have to either "explore" features yourself or spend some time with the people who build the product so they can show you the features.

Some startups have features hidden under such complex logic that no one in the company remembers them.

Project Management

Depending on how mature your startup is, you may fret hearing words like "Project Manager" but bear with me! These questions are essential because, as a QA, you will need to understand "what" engineers were supposed to build. For example, you may work in a company where engineers come up with user stories and hack features together.

- how are new features/changes requested? Who "owns" that process? How are the acceptance criteria set?

- are engineers building their own backlog? Where do PRs come from, and who tracks code changes?

If you worked as an engineer at the organization before, you would know exactly how things work. However, if you came from outside, I suggest you take a look at issues and PRs. See if engineers link PRs to issues and if they explain them reasonably well. If the majority of issues in your system have a vague title and no description, then know one thing: you will have to spend a lot of time working with "whoever owns the project management" and with "engineers" on improving the quality of issue descriptions.
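To get a feel for how widespread vague issues are, you could run a quick check over an export of your tracker. The following is a minimal sketch under stated assumptions: the `Issue` type, the `issue_problems` function, and the thresholds (three title words, 30 description characters) are arbitrary choices for illustration, not a standard.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Issue:
    """Simplified issue record (hypothetical; adapt to your tracker's export)."""
    title: str
    description: str = ""


def issue_problems(
    issue: Issue,
    min_title_words: int = 3,
    min_description_chars: int = 30,
) -> List[str]:
    """Return a list of quality problems found in an issue (empty if none)."""
    problems = []
    if len(issue.title.split()) < min_title_words:
        problems.append("vague title")
    if len(issue.description.strip()) < min_description_chars:
        problems.append("missing or very short description")
    return problems


if __name__ == "__main__":
    # Invented examples of a poor and a reasonable issue.
    issues = [
        Issue("Fix bug"),
        Issue(
            "Checkout page shows wrong balance",
            "Steps to reproduce: open checkout with two transactions; balance shows 0.",
        ),
    ]
    for issue in issues:
        found = issue_problems(issue)
        status = ", ".join(found) if found else "ok"
        print(f"{issue.title!r}: {status}")
```

A heuristic like this only flags candidates for a conversation; whether an issue is actually clear enough is still a judgment call for the people involved.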

Release

One of the final steps that will affect your team is the release process. That itself can be very confusing to many people, as there are many definitions of a "release". What exactly a "release" means and how it relates to QA will be unique to your company.

- how is a release candidate tested? Is it tested at all?

- do you have a release process?

- how often do you release?

- when does the QA test your system "before" the release?

- who is responsible for the release? Do you have a Release Manager?

- do you deploy to SaaS? Do you release "named" versions?

- which versions do you support?

- do you track test runs executed for release candidates?

- what’s your versioning strategy? Semantic versioning? Can you identify the version of the product quickly to report a bug for the right version?

Asking yourself those questions can help you synchronize QA’s efforts with the rest of the company. Depending on your QA plan, you may want to schedule some time for "hardening", where your team manually tests for regressions, for instance. However, if you don’t have a stable release process, you will end up jumping frantically between Slack channels flooded with requests to test pull requests, explaining when the next release is going to happen, etc. In extreme cases, you may need to temporarily take on the role of Release Manager and set up the release process yourself.
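On the versioning question in the list above: if you use semantic versioning, a version string of the form MAJOR.MINOR.PATCH can be parsed and compared in a few lines, which makes it easy to tell whether a bug report targets a version you still support. This is a minimal sketch that handles only the three numeric parts (no pre-release or build metadata) and accepts an optional leading "v", which is a common tag convention rather than part of the specification. The names `Version`, `parse_version`, and `is_supported` are hypothetical.

```python
import re
from typing import NamedTuple


class Version(NamedTuple):
    """MAJOR.MINOR.PATCH; tuples compare field by field, left to right."""
    major: int
    minor: int
    patch: int


# Core version only; pre-release and build metadata are deliberately not handled.
_CORE_VERSION = re.compile(r"^v?(\d+)\.(\d+)\.(\d+)$")


def parse_version(text: str) -> Version:
    """Parse strings such as '1.4.2' or 'v1.4.2' into a Version."""
    match = _CORE_VERSION.match(text.strip())
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
    return Version(*(int(part) for part in match.groups()))


def is_supported(reported: str, oldest_supported: str) -> bool:
    """True if the reported version is at least the oldest supported one."""
    return parse_version(reported) >= parse_version(oldest_supported)


if __name__ == "__main__":
    # Numeric comparison matters: 1.10.0 is newer than 1.9.3.
    print(is_supported("v1.10.0", "1.9.3"))
    print(is_supported("1.2.0", "1.9.3"))
```

Comparing parsed numbers instead of raw strings avoids the classic mistake of sorting "1.10.0" before "1.9.3".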

Engineering

Last but not least, the cornerstone of the quality lifecycle: engineering.

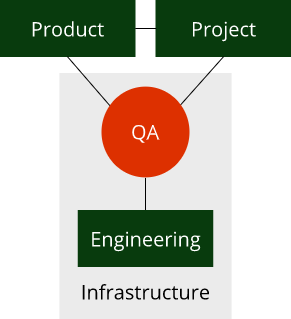

A simplified diagram of where QA sits in the organization may resemble something like:

Engineers are the ones who implement the features requested by the project stakeholder. As a QA, you will help ensure that they build the right things and that the quality of what they produce meets the standards.

- how are new features developed? Do they go through any QA?

- how are engineers aligned with QA? Who tests their work? Who assures they build the right stuff?

You may, for instance, find out that engineers implement features (and bug fixes) that no one ever tested. Let’s say the majority of those changes go straight to production without QA.

Risks that this carries include:

- corner cases that engineers missed,

- incomplete features (e.g., an engineer was supposed to add a checkout page with a balance and transaction summary, but they only implemented the balance and forgot about the summary),

- regressions caused in other parts of the system that engineers did not know about.

Identify the status quo and, if you plan to make any changes to the developers’ workflow, make sure to sync with engineering managers. The most challenging thing in QA is changing the organization’s process (people are reluctant to change, and you may need to satisfy the requirements of multiple stakeholders). A lot of your work will be negotiation, communication, and strategy.

Summary

Thank you for reading the first part of the article. Please feel free to share if you liked it, and follow me on Twitter to get instant updates.

The next article is about lightweight QA strategies. I will attempt to help you identify the QA role in the organization as well as share some hints about manual and automated testing.